Criteria for good measurement

The basic problem of scaling is the conversion of qualitative data into quantitative data. The following steps are included in scaling.

· Have thorough knowledge of the subject. This includes knowing what the researcher wishes to measure. This step helps to determine the scalability of the phenomenon under study.

· Decide the characteristics of the respondents, such as age, sex, education, income, location and profession.

· Decide the data collection technique.

· Ensure goodness of measures, which means confirming that the instrument developed to measure a particular concept is indeed accurately measuring the variable.
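To make the idea of converting qualitative data into quantitative data concrete, here is a minimal sketch that codes verbal answers as numbers. The five-point agreement scale, the `LIKERT` mapping and the `code_responses` helper are assumptions chosen for illustration; they do not come from the notes above.

```python
# Hypothetical five-point agreement scale (an assumption for illustration).
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_responses(responses, scale=LIKERT):
    """Turn verbal (qualitative) answers into numeric (quantitative) codes."""
    return [scale[answer.strip().lower()] for answer in responses]


# Made-up answers from four respondents to one questionnaire item.
answers = ["Agree", "Strongly agree", "Neutral", "Disagree"]
coded = code_responses(answers)
```

Once answers are coded this way, they can be summed or averaged across items, which is what the reliability and validity checks discussed next operate on.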

------------------------------------------------------------------------------------------------------------------------

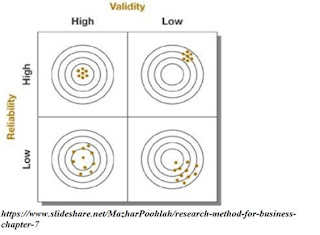

Reliability

· Reliability is the stability and consistency with which the instrument measures the concept; it helps to assess the goodness of a measure.

· A measure is considered reliable if it would give the researcher the same result over and over again.

· Reliability is measured in two forms: stability and consistency.

§ Stability of a measure is the ability of a measure to remain the same over time

§ Two types of stability reliability

· Test-retest reliability

· Parallel-form reliability

§ Inter-item consistency reliability

· This is a test of the consistency of respondents’ answers to all the items in a measure.

· To the degree that items are independent measures of the same concept, they will be correlated with one another.

· The most popular tests of inter-item consistency reliability are Cronbach’s alpha (1946), which is used for multipoint-scaled items, and the Kuder-Richardson formulas (1937), used for dichotomous items.

· The higher the coefficients, the better the measuring instrument.

§ Split-half reliability

· Split-half reliability reflects the correlations between two halves of an instrument.
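As a rough illustration of the consistency measures above, the sketch below computes Cronbach's alpha and a split-half correlation for a small set of made-up scores. The data, the function names (`cronbach_alpha`, `split_half_correlation`, `pearson`) and the odd/even split of items are assumptions for illustration, not part of the original notes.

```python
from math import sqrt
from statistics import variance


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def cronbach_alpha(scores):
    """alpha = k/(k-1) * (1 - sum of item variances / variance of totals)."""
    k = len(scores[0])                      # number of items
    items = list(zip(*scores))              # one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


def split_half_correlation(scores):
    """Correlate totals of odd-numbered items with totals of even-numbered items."""
    first_half = [sum(row[0::2]) for row in scores]
    second_half = [sum(row[1::2]) for row in scores]
    return pearson(first_half, second_half)


# Hypothetical data: 5 respondents (rows) answering 4 items (columns) on a 1-5 scale.
scores = [
    [4, 5, 4, 5],
    [3, 3, 2, 3],
    [5, 5, 5, 4],
    [2, 1, 2, 2],
    [4, 4, 3, 4],
]

alpha = cronbach_alpha(scores)
half_r = split_half_correlation(scores)
```

The same `pearson` helper, applied to total scores from two administrations of the instrument, would give a test-retest coefficient. In practice the split-half correlation is often adjusted for test length (for example with the Spearman-Brown formula), a step this sketch leaves out.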

-------------------------------------------------------------------------------------------------------------------------

Validity of the research (Dudovsky, 2018)

· Validity relates to the extent to which the survey measures the right elements that need to be measured.

· It shows the degree to which an instrument measures what it is intended to measure.

· There are two divisions of validity, namely internal validity and external validity.

§ It is also known as logical validity or face validity.

§ It is used to know whether or not the study or test measures what it is supposed to measure.

§ Content validity ensures that the measure includes an adequate and representative set of items that tap the concept. It is a function of how well the dimensions and elements of a concept have been delineated.

§ Face validity is the weakest form of validity.

§ For example, IQ tests are supposed to measure intelligence. The test would be valid if it accurately measured intelligence. Thus, a test can be said to have face validity if it "looks like" it is going to measure what it is supposed to measure.

§ Content validity is the extent to which an instrument provides adequate coverage of the topic under study. It can also be determined by using a panel of persons who judge how well the measuring instrument meets the standards.

§ Criterion-related validity relates to the ability of the instrument to predict some outcome or estimate the existence of some current condition.

§ It is the degree to which a measurement can accurately predict specific criterion variables. It can tell us how accurately a measurement can predict criteria or indicators of a construct in the real world.

§ It measures how well one measure predicts an outcome for another measure. This test is useful for predicting performance or behaviour in another situation (past, present or future). For example, a job applicant takes an entry test during the interview process. If this test accurately predicts how well the employee will perform on the job, the test is said to have criterion validity. The first measure (the entry test) is called the predictor variable or estimator. The second measure (future performance) is called the criterion variable, as long as the measure is known to be a valid tool for predicting outcomes.

§ Types of criterion-related validity

· Predictive validity

· Concurrent validity

Concurrent validity applies when the predictor and criterion data are collected at the same time. It can also refer to a case where one test replaces another (e.g. because it is cheaper). For example, a written driver’s test replaces an in-person test with an instructor.
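One common way to put a number on criterion-related validity is to correlate the predictor with the criterion. The sketch below does this for the entry-test example; the scores, the performance ratings and the `validity_coefficient` name are invented for illustration, and a plain Pearson correlation is only one of several possible choices.

```python
from math import sqrt


def validity_coefficient(predictor, criterion):
    """Pearson correlation between predictor scores and criterion scores."""
    n = len(predictor)
    mean_p, mean_c = sum(predictor) / n, sum(criterion) / n
    spc = sum((p - mean_p) * (c - mean_c) for p, c in zip(predictor, criterion))
    spp = sum((p - mean_p) ** 2 for p in predictor)
    scc = sum((c - mean_c) ** 2 for c in criterion)
    return spc / sqrt(spp * scc)


# Hypothetical data for six hires (illustrative only).
entry_test = [55, 62, 70, 78, 85, 90]             # predictor: score at interview
performance = [2.9, 3.1, 3.6, 3.8, 4.4, 4.5]      # criterion: later job rating

r = validity_coefficient(entry_test, performance)
```

If the performance ratings are gathered some time after the test, the coefficient speaks to predictive validity; if both are gathered at the same time, it speaks to concurrent validity. The calculation is the same, only the timing of data collection differs.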

§ Construct validity relates to the assessment of the suitability of a measurement tool to measure the phenomenon being studied.

§ It is the degree to which a test measures what it claims to be measuring.

§ It shows whether a scale or test measures the construct adequately.

§ A construct (variable) is an idea that is to be translated into concrete terms through the operationalization process. Construct validity refers to whether the operational definition of a variable actually reflects the true theoretical meaning of a concept.

§ It is a test of generalisation, like external validity, but it assesses whether the variable (construct) that is being tested for is addressed by the experiment. For example, you might design a study to test whether an educational program increases artistic ability amongst pre-school children. Construct validity is a measure of whether your research actually measures artistic ability, a slightly abstract label.

§ Subcategories of construct validity are convergent validity and discriminant validity.

· Convergent validity

The degree to which a measure is correlated with other measures that it is theoretically predicted to correlate with.

· Discriminant validity

Tests whether concepts or measurements that are supposed to be unrelated are, in fact, unrelated.

In the above example, based on theory, there are two different constructs, namely self-esteem and locus of control, with 2 items each. Here there is discriminant validity, as the relationship between measures from different constructs is very low. It can be said that the two sets of measures are discriminated from each other.
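A pattern like the one just described can be checked numerically by correlating every pair of items and separating within-construct pairs from cross-construct pairs. The sketch below uses invented responses for two self-esteem items and two locus of control items; the item labels (`SE1`, `SE2`, `LOC1`, `LOC2`) and all scores are hypothetical and were chosen so that the pattern is easy to see.

```python
from itertools import combinations
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical answers from 8 respondents on a 1-5 scale (illustrative only).
items = {
    "SE1": [1, 1, 5, 5, 1, 1, 5, 5],
    "SE2": [2, 1, 5, 4, 1, 2, 4, 5],
    "LOC1": [1, 5, 1, 5, 1, 5, 1, 5],
    "LOC2": [2, 5, 1, 4, 1, 4, 2, 5],
}
construct_of = {
    "SE1": "self-esteem",
    "SE2": "self-esteem",
    "LOC1": "locus of control",
    "LOC2": "locus of control",
}

within, across = [], []
for a, b in combinations(items, 2):
    r = pearson(items[a], items[b])
    # Same construct: evidence about convergent validity.
    # Different constructs: evidence about discriminant validity.
    if construct_of[a] == construct_of[b]:
        within.append(r)
    else:
        across.append(r)

convergent_ok = min(within) > 0.7                     # cut-off is an arbitrary assumption
discriminant_ok = max(abs(r) for r in across) < 0.3   # cut-off is an arbitrary assumption
```

High correlations among items of the same construct point to convergent validity, while near-zero correlations between items of different constructs point to discriminant validity. The 0.7 and 0.3 cut-offs are placeholders, not established thresholds.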

-------------------------------------------------------------

Understanding Reliability and Validity
